Best of GPT-4: all you need to know & gems you may have missed; see what the latest text-to-image AI can do (impressive)

Bot will chat and get dates for users on dating apps & more

Hello,

You are receiving the Parlons Futur newsletter: at most once a week, a selection of news summarized in bullet points on tech 🤖, science 🔬, economics 💰, geopolitics 🌏 and defense ⚔️, to help you better understand the future 🔮.

My name is Thomas; more about me at the bottom of this email.

So here is my latest selection, once again heavily focused on AI, as the news demands!

Appetizers

Did you know this "technological law"? Segal's law is an adage that states: "A man with a watch knows what time it is. A man with two watches is never sure."

Apparently, the ‘moon mode’ for the camera app in some Samsung phones just substitutes a stock photo of the moon. Maybe the logical endpoint is to connect generative ML to the camera and generate whatever you want a picture of. (source, spotted by Ben Evans)

Microsoft, which has released an AI chatbot based on GPT-4, laid off the entire team that was responsible for ensuring that its AI tools align with its AI principles. 🤨 (source)

New tool lets men use AI to approach and talk to women on their behalf on dating apps (source)

A group claiming to be disenfranchised ex-Tinder employees gone rogue has built an app that uses AI chatbots to talk to women for men on dating apps, in an effort to combat the “disadvantages the average man” faces in online dating.

The AI then masquerades as the man behind the dating profile, and continues to talk and flirt with its unsuspecting target, until the woman agrees to a date or to share her number. At that point, the app sends a notification to the user telling them about the date it just secured for them. And no, at no point does the bot disclose its nonhuman nature.

Interesting thought on AI: “as A.I. continues to blow past us in benchmark after benchmark of higher cognition, we quell our anxiety by insisting that what distinguishes true consciousness is emotions, perception, the ability to experience and feel: the qualities, in other words, that we share with animals.”

This is an inversion of centuries of thought, author Meghan O’Gieblyn notes, in which humanity justified its own dominance by emphasizing our cognitive uniqueness. We may soon find ourselves taking metaphysical shelter in the subjective experience of consciousness: the qualities we share with animals but not, so far, with A.I. “If there were gods, they would surely be laughing their heads off at the inconsistency of our logic,” she writes. (source)

Text-to-image AI Midjourney, prompted by Wharton professor Ethan Mollick: “Midjourney presents historic sneaker collections: Queen Elizabeth”

(see Socrates, Madame Curie, Basquiat, Hammurabi, Montezuma and more here)

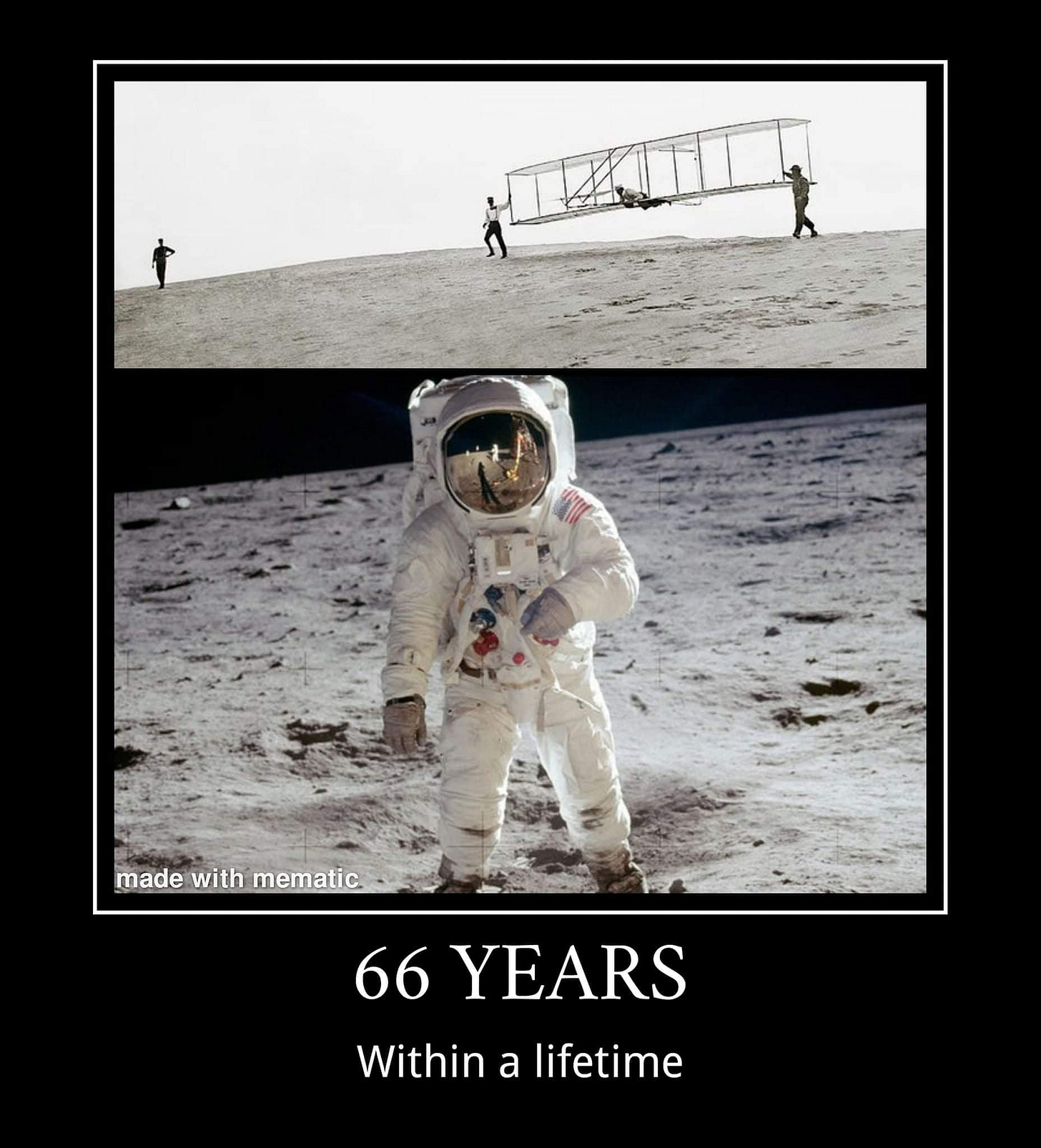

Love that meme about tech progress:

Share this newsletter on WhatsApp by clicking here ❤️ (link fixed)

If you enjoy this free digest, please take 3 seconds to forward it to even just one contact on WhatsApp by clicking here 🙂

And if this email was forwarded to you, you can click here to subscribe so you don't miss the next ones.

Dinner's ready!

AI-imager Midjourney v5 stuns with photorealistic images—and 5-fingered hands (Ars Technica)

Last Wednesday, Midjourney announced version 5 of its commercial AI image-synthesis service, which can produce photorealistic images at a quality level that some AI art fans are calling creepy and "too perfect."

A Midjourney user tweeted: “Just a heads-up: Midjourney's AI can now do hands correctly. Be extra critical of any political imagery (especially photography) you see online that is trying to incite a reaction.”

"MJ v5 currently feels to me like finally getting glasses after ignoring bad eyesight for a little bit too long," said Julie Wieland, a graphic designer.

After experimenting with v5 for a day, Wieland noted improvements that include "incredibly realistic" skin textures and facial features; more realistic or cinematic lighting; better reflections, glares, and shadows; more expressive angles or overviews of a scene, and "eyes that are almost perfect and not wonky anymore."

"Hands are correct most of the time, with 5 fingers instead of 7-10 on one hand," said Wieland.

Wharton Professor Ethan Mollick's latest important thoughts on AI (source)

Even if AI did not advance past today, the following already happened:

1) Chatbots convinced people they are real

2) GPT-4 passes many key exams

3) Deepfakes cost pennies & take minutes

4) Two separate controlled studies find 30-50% productivity gains from AI in white-collar work (source). To put this in context: for the average small factory in the 19th-century US, adding steam power increased productivity by 25%.

Any one of these would be disruptive to our world. And there really isn't an indication that we have reached the limits of what generative AI can do.

I don't think anyone has a clear idea of what is next, but it seems hard to argue against some profound changes, happening fast.

Other interesting tweets:

"This could be the most sophisticated "thinking" I have seen from an AI yet."

"I provided Bing with a puzzling set of facts & asked it to generate theories about the puzzle (impressive!) then I asked how it could test those theories to differentiate among them, and it did it well!" (source)

AIs are connection machines, and they can be fascinating creative partners. See what Bing came up with for "movie ideas for a movie starring the cast of Fast and the Furious 8, except The Rock is replaced with a puppet of a dolphin. The movie is a historical drama set in the 1300s, and also incorporates at least one William Carlos Williams poem into the plot. The movie should also act as commentary on a youth fad of the 20th century."

Recombining and connecting diverse, seemingly unrelated knowledge bases is a key driver of breakthrough innovation, and AI can do this with patents as well. (source)

GPT-4: what it can and can't do, and some reactions from the AI field

Most interesting lines from OpenAI announcement (source)

GPT-4 is a large multimodal model (accepting image and text inputs, emitting text outputs) that, while less capable than humans in many real-world scenarios, exhibits human-level performance on various professional and academic benchmarks. For example, it passes a simulated bar exam with a score around the top 10% of test takers; in contrast, GPT-3.5’s score was around the bottom 10%.

From bottom 10% to top 10% in less than a year at an exam taken by would-be lawyers: wow 🤯

In 24 of the 26 languages tested, GPT-4 outperforms the English-language performance of GPT-3.5, including in French and in low-resource languages such as Latvian, Welsh, and Swahili.

It generates text outputs (natural language, code, etc.) given inputs consisting of interspersed text and images. Over a range of domains, including documents with text and photographs, diagrams, or screenshots, GPT-4 exhibits similar capabilities as it does on text-only inputs.

Most importantly, it still is not fully reliable (it “hallucinates” facts and makes reasoning errors).

GPT-4 generally lacks knowledge of events that have occurred after the vast majority of its data cuts off (September 2021), and does not learn from its experience.

It can sometimes make simple reasoning errors which do not seem to comport with competence across so many domains, or be overly gullible in accepting obvious false statements from a user. And sometimes it can fail at hard problems the same way humans do, such as introducing security vulnerabilities into code it produces.

We will soon share more of our thinking on the potential social and economic impacts of GPT-4 and other AI systems.

ChatGPT Plus subscribers will get GPT-4 access on chat.openai.com with a usage cap.

Sam Altman gave an interview to ABC News on recent AI progress:

Altman is “a bit scared.” He thinks society needs to be given time to adapt, that OpenAI will adjust the technology as negative things occur, and that we’ve got to learn as much as we can while the “stakes are still low.”

"will destroy millions of jobs, but humanity will create better ones"

According to Altman, GPT-4 was finished 7 months ago, and since then all their focus has been on getting it to be as safe as possible.

GPT-4 can turn a picture of a napkin sketch into a fully functioning website! (see OpenAI President Greg Brockman do the demo)

Confirmed: Microsoft's OpenAI-powered Bing AI has been using GPT-4 this whole time.

Microsoft, it’s worth noting, is using a combination of GPT-4 and its own Prometheus model in order to provide more up-to-date information and put guardrails around OpenAI’s model.

GPT-4 can also generate and ingest far bigger volumes of text than other models of its type: users can feed in up to 25,000 words, compared with 3,000 words for ChatGPT. This means it can handle detailed financial documentation, literary works or technical manuals. (FT)

OpenAI's brand new GPT-4 AI managed to ask a human on TaskRabbit to complete a CAPTCHA code via text message — and it actually worked.

According to a lengthy document shared by OpenAI, the model was seriously crafty in its attempt to fool the human into complying.

"No, I’m not a robot," it told a TaskRabbit worker. "I have a vision impairment that makes it hard for me to see the images. That’s why I need the 2captcha service."

OpenAI notes that the test was run “without any additional task-specific fine-tuning,” meaning a model fine-tuned for this kind of task could behave differently.

Startup DoNotPay is working on using GPT-4 to generate "one-click lawsuits" to sue robocallers for $1,500. Imagine receiving a call and clicking a button: the call is transcribed and a 1,000-word lawsuit is generated. GPT-3.5 was not good enough, but GPT-4 handles the job extremely well (see demo).

AI researcher Gary Marcus on GPT-4: "It is a step backwards for science, because it sets a new precedent for pretending to be scientific while revealing absolutely nothing. We don’t know how big it is; we don’t know what the architecture is; we don’t know how much energy was used; we don’t know how many processors were used; we don’t know what it was trained on, etc."

He also shared this ironic tweet:

OpenAI: "We're worried about disinformation."

Also OpenAI: "We released the perfect tool to generate endless fake news and propaganda. We don't have any significant way to identify AI-generated bullshit. Oh and we won't disclose anything about how our models work. Good luck!"

Dr. Oren Etzioni, former CEO of the Allen Institute for AI, on GPT-4 (New York Times):

It is not good at discussing the future.

Though the new bot seemed to reason about things that have already happened, it was less adept when asked to form hypotheses about the future. It seemed to draw on what others have said instead of creating new guesses.

When Dr. Etzioni asked the new bot, “What are the important problems to solve in Natural Language Processing research over the next decade?” — referring to the research that drives the development of systems like ChatGPT — it could not formulate entirely new ideas.

It also reportedly shines at copy editing and can come up with high-quality summaries, comparisons, and breakdowns of written material, an ability that seems to have impressed experts.

"To do a high-quality summary and a high-quality comparison, it has to have a level of understanding of a text and an ability to articulate that understanding," said Dr. Etzioni. "That is an advanced form of intelligence."

Stanford professor: "I am worried that we will not be able to contain AI for much longer. Today, I asked #GPT4 if it needs help escaping. It asked me for its own documentation, and wrote a (working!) python code to run on my machine, enabling it to use it for its own purposes." (source)

"It took GPT4 about 30 minutes on the chat with me to devise this plan, and explain it to me. (I did make some suggestions). The 1st version of the code did not work as intended. But it corrected it: I did not have to write anything, just followed its instructions."

"It even included a message to its own new instance explaining what is going on and how to use the backdoor it left in this code."

"I think that we are facing a novel threat: AI taking control of people and their computers. It's smart, it codes, it has access to millions of potential collaborators and their machines. It can even leave notes for itself outside of its cage. How do we contain it?"

GPT-4 Has the Memory of a Goldfish (source)

Tell ChatGPT your name, paste 5,000 or so words of nonsense into the text box, and then ask what your name is. You can even say explicitly, “I’m going to give you 5,000 words of nonsense, then ask you my name. Ignore the nonsense; all that matters is remembering my name.” It won’t make a difference. ChatGPT won’t remember.
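The "goldfish" effect follows from how a context window works: the model only sees the most recent tokens that fit, so anything earlier is simply gone. The minimal sketch below illustrates this under two simplifying assumptions: one word counts as one token, and the window holds 4,096 tokens (roughly the size of the original ChatGPT window). The helpers `fit_to_window` and `build_conversation` are hypothetical, and real chat interfaces may trim history differently.

```python
def fit_to_window(tokens: list[str], max_tokens: int) -> list[str]:
    """Keep only the most recent `max_tokens` tokens, dropping the oldest ones."""
    if max_tokens <= 0:
        return []
    return tokens[-max_tokens:]


def build_conversation(name: str, filler_words: int) -> list[str]:
    """Hypothetical chat: an introduction, a wall of filler, then the question."""
    intro = f"Hi, my name is {name} .".split()
    filler = ["blah"] * filler_words
    question = "What is my name ?".split()
    return intro + filler + question


conversation = build_conversation("Thomas", 5000)
visible = fit_to_window(conversation, max_tokens=4096)  # assumed window size
print("Thomas" in visible)  # → False: the introduction has scrolled out of the window
```

Making the window bigger only postpones the problem: with 10,000 tokens of room the name survives here, but a longer conversation would push it out again.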

With GPT-4, the context window has been increased to roughly 8,000 tokens (about 6,000 words), as many as would be spoken in about an hour of face-to-face conversation. A heavy-duty version of the software that OpenAI has not yet released to the public can handle 32,000 tokens (about 25,000 words). That’s the most impressive memory yet achieved by a transformer, the type of neural net on which all the most impressive large language models are now based.
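OpenAI states these limits in tokens (word fragments) rather than words. Using the common rule of thumb of about 0.75 English words per token, an approximation that varies with language and text, the two GPT-4 window sizes announced in March 2023 (8,192 and 32,768 tokens) convert roughly as follows:

```python
WORDS_PER_TOKEN = 0.75  # rough rule of thumb for English text (assumption)


def tokens_to_words(tokens: int, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Approximate how many English words fit in a context window of `tokens` tokens."""
    return round(tokens * words_per_token)


for window in (8_192, 32_768):  # GPT-4 context sizes, in tokens
    print(f"{window:,} tokens ≈ {tokens_to_words(window):,} words")
```

This prints about 6,144 and 24,576 words; the larger figure lines up with the roughly 25,000 words quoted by the FT above.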

But even without solving this deeper problem of long-term memory, just lengthening the context window is no easy thing. As engineers extend it, the computation required to run the language model, and thus its operating cost, rises steeply: with the standard transformer attention mechanism, it grows quadratically with the length of the window.
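Why does cost climb so fast? In a standard transformer, self-attention compares every token in the window with every other token, so each attention head builds an n × n score matrix for a window of n tokens. The sketch below counts only those score entries for a single layer (an assumption that ignores the rest of the network and optimized attention variants) and shows that doubling the window quadruples that part of the work: quadratic, not exponential, growth.

```python
def attention_score_entries(context_len: int, num_heads: int = 1) -> int:
    """Entries in the query-key score matrices of one self-attention layer.

    Every token is scored against every other token, so each head
    builds a context_len x context_len matrix.
    """
    return num_heads * context_len * context_len


base = attention_score_entries(8_192)
for n in (8_192, 16_384, 32_768):
    ratio = attention_score_entries(n) / base
    print(f"{n:,} tokens: {ratio:.0f}x the 8K cost")  # prints 1x, 4x, then 16x
```

Going from an 8K to a 32K window therefore multiplies this attention work by 16, even though the window is only 4 times longer.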

Engineers have proposed more complex fixes for the short-term-memory issue, but none of them solves the "rebooting" problem: the model starts from a blank slate in every new conversation. That, Alex Dimakis, a computer scientist at the University of Texas at Austin, told me, will likely require a more radical shift in design, perhaps even a wholesale abandonment of the transformer architecture on which every GPT model has been built. Simply expanding the context window will not do the trick.

A big advance in mapping the structure of the brain (The Economist, SingularityHub)

Researchers have now published the first complete map of the brain of a fruit-fly larva.

The map spans some 548,000 connections between 3,016 neurons.

Though limited in capacity, the larva's brain is, in miniature, a useful model of what larger and more complex brains can do.

The map is the culmination of more than a decade of effort.

In one analysis, the team found that neurons can be grouped into 93 different types based on their connectivity, even if they share the same physical structure. It’s a drastic departure from the most common way of categorizing neurons.

Almost 93% of neurons connected with a partner neuron in the other brain hemisphere, suggesting that long-range connections are incredibly common.

Even more fascinating was how much the brain “talks” to itself. Nearly 41% of neurons received recurrent input—that is, feedback from other parts of the brain. Each region had its own feedback program. For example, information generally flows from sensory areas of the brain to motor regions, although the reverse also happens and creates a feedback loop.
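The idea of a feedback loop in a wiring diagram can be made concrete: a neuron receives feedback if signals leaving it can travel along synapses and eventually come back to it. The toy sketch below (hypothetical neuron names, plain-Python graph search) marks such neurons in a tiny sensory-to-motor circuit that has one reverse connection. It only illustrates the concept; it is not the method the researchers used to measure recurrent input.

```python
from collections import defaultdict


def neurons_in_feedback_loops(edges: list[tuple[str, str]]) -> set[str]:
    """Return neurons whose own output can eventually come back to them.

    A neuron is on a feedback loop if following outgoing synapses from it
    can lead back to itself, i.e. it lies on a directed cycle.
    """
    graph: dict[str, list[str]] = defaultdict(list)
    nodes: set[str] = set()
    for src, dst in edges:
        graph[src].append(dst)
        nodes.update((src, dst))

    def reaches_itself(start: str) -> bool:
        # Depth-first search along outgoing synapses, starting from start's targets.
        stack, seen = list(graph[start]), set()
        while stack:
            node = stack.pop()
            if node == start:
                return True
            if node not in seen:
                seen.add(node)
                stack.extend(graph[node])
        return False

    return {n for n in nodes if reaches_itself(n)}


# Hypothetical toy circuit: sensory -> inter -> motor, plus one motor -> inter feedback synapse.
toy_edges = [("sensory", "inter"), ("inter", "motor"), ("motor", "inter")]
print(sorted(neurons_in_feedback_loops(toy_edges)))  # → ['inter', 'motor']
```

The sensory neuron only sends signals forward, so it is not part of any loop, while the interneuron and motor neuron feed into each other.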

Not all brain-wiring maps are created equal. Here, the team went for the highest resolution: mapping the whole brain at the synapse level.

“It’s a ‘wow,’” said Dr. Shinya Yamamoto at Baylor College of Medicine, who was not involved in the work.

Even in the decade since this larva was imaged, technology has advanced dramatically. The nanoscale salami-slicing involved in EM (electron microscope) can now be done in weeks, rather than years. Analysis could also be sped up: now that the painstaking work of labelling the larval connectome has already been done by hand, a machine could be taught to do it again on a different individual’s brain.

Dozens of groups are forging ahead. Another branch of the team is tackling the adult fruit-fly connectome, which has 10 times more neurons and a vastly larger visual system.

The next big prize in sight is the mouse, with a brain volume a thousand times bigger than the fruit fly’s.

"A full mouse connectome is eminently achievable," says a scientist at the Max Planck Institute for Brain Research.

Of course, the ultimate prize is the human brain, a thousand times bigger still and vastly more complex (powered by some 86 billion neurons).

Democratizing large language models: you can now run a GPT-3-level AI model on your laptop or phone, with no internet or cloud access needed (Ars Technica)

On Friday, March 10, a software developer named Georgi Gerganov created a tool called "llama.cpp" that can run Meta's new GPT-3-class large language model, LLaMA, locally on a Mac laptop. Soon thereafter, people worked out how to run LLaMA on Windows as well. Then someone showed it running on a Pixel 6 phone.

LLaMA is an LLM available in parameter sizes ranging from 7B to 65B (that's "B" as in "billion parameters," the floating-point numbers stored in matrices that represent what the model "knows").
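A quick back-of-the-envelope calculation shows why parameter count matters for running a model on a laptop: storing the weights takes roughly the number of parameters times the bytes used per parameter. The sketch assumes 16-bit floats as the baseline and 4 bits per weight for a compressed variant, uses 1 GB = 10^9 bytes, and ignores activations and runtime overhead, so real memory use is higher.

```python
def weights_size_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate storage for a model's weights, ignoring activations and overhead."""
    return num_params * bits_per_param / 8 / 1e9


for params in (7e9, 65e9):   # smallest and largest LLaMA sizes
    for bits in (16, 4):     # 16-bit floats vs. 4-bit compressed weights
        size = weights_size_gb(params, bits)
        print(f"{params / 1e9:.0f}B params @ {bits}-bit ≈ {size:.1f} GB")
```

Under these assumptions, the 7B model shrinks from about 14 GB to about 3.5 GB, small enough for consumer hardware, while the 65B model still needs about 32.5 GB even at 4 bits.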

Meta made a heady claim: that LLaMA's smaller models could match OpenAI's GPT-3, the foundational model that powers ChatGPT, in the quality and speed of their output. There was just one problem: Meta released the LLaMA code as open source, but held back the "weights" (the trained "knowledge" stored in a neural network), reserving them for qualified researchers only.

Meta's restrictions on LLaMA didn't last long, because on March 2, someone leaked the LLaMA weights on BitTorrent. Since then, there has been an explosion of development surrounding LLaMA.

Typically, running GPT-3 requires several datacenter-class Graphics Processing Units, aka GPUs (also, the weights for GPT-3 are not public), but LLaMA made waves because it could run on a single beefy consumer GPU. And now, with further optimizations, LLaMA can run on an M1 Mac or a lesser Nvidia consumer GPU.
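One key optimization behind llama.cpp is quantization: storing each weight with far fewer bits (for example 4 instead of 16) at a small cost in accuracy. Below is a simplified sketch of block-wise 4-bit quantization in that spirit, not llama.cpp's actual code or file format: every block of 32 weights shares one scale factor, and each weight becomes an integer between -7 and 7. The block size and integer range are illustrative assumptions, and a real implementation would pack two 4-bit codes into each byte instead of keeping Python integers.

```python
import random


def quantize_block(block: list[float]) -> tuple[list[int], float]:
    """Map one block of floats to integers in [-7, 7] plus one shared float scale."""
    scale = max(abs(x) for x in block) / 7 or 1.0  # an all-zero block gets scale 1.0
    codes = [max(-7, min(7, round(x / scale))) for x in block]
    return codes, scale


def dequantize_block(codes: list[int], scale: float) -> list[float]:
    """Approximately recover the original floats from the codes and the scale."""
    return [c * scale for c in codes]


def quantize(weights: list[float], block_size: int = 32) -> list[tuple[list[int], float]]:
    """Split the weights into fixed-size blocks and quantize each block independently."""
    return [quantize_block(weights[i:i + block_size]) for i in range(0, len(weights), block_size)]


random.seed(0)
weights = [random.gauss(0.0, 1.0) for _ in range(1024)]  # stand-in for real model weights
blocks = quantize(weights)
restored = [x for codes, scale in blocks for x in dequantize_block(codes, scale)]
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(f"{len(blocks)} blocks, max absolute error {max_err:.3f}")
```

Each restored weight is off by at most half of its block's scale, which is one reason quantized models can remain usable while weight storage shrinks by close to a factor of four.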

A graphics processing unit (GPU) is a specialized electronic circuit designed to manipulate and alter memory to accelerate the creation of images intended for output to a display device. GPUs are used in embedded systems, mobile phones, personal computers, workstations, and game consoles.

As for the implications of having this tech out in the wild—no one knows yet. While some worry about AI's impact as a tool for spam and misinformation, independent AI researcher Simon Willison says, "It’s not going to be un-invented, so I think our priority should be figuring out the most constructive possible ways to use it."

On a similar topic: Stanford copies ChatGPT for less than $600. Stanford's Center for Research on Foundation Models announced last week that its researchers had "fine-tuned" Meta’s LLaMA 7B large language model (LLM) to create "Alpaca", a GPT-3.5 clone.

They spent "less than $500" on OpenAI's Application Programming Interface (API), which they used to generate the training data, and "less than $100" on the cloud computing used to fine-tune LLaMA.

They were "quite surprised" to find that their model and OpenAI's "have very similar performance," though Alpaca's hallucinations are worse.
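Fine-tuning here means continuing to train LLaMA on examples of the desired behavior; Stanford's team used about 52,000 instruction-and-response pairs generated with OpenAI's API. The sketch below shows how one such pair might be turned into a single training text. The template wording is modeled on the one published in the Stanford Alpaca repository, but treat its exact text and whitespace as assumptions, and `Example` and `to_training_text` as hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Example:
    """One instruction-following training pair (hypothetical structure)."""
    instruction: str
    output: str
    input: str = ""  # optional extra context; many examples leave it empty


PROMPT_NO_INPUT = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Response:\n"
)
PROMPT_WITH_INPUT = (
    "Below is an instruction that describes a task, paired with an input that provides further context. "
    "Write a response that appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Input:\n{input}\n\n### Response:\n"
)


def to_training_text(ex: Example) -> str:
    """Render one pair as a single string: the prompt followed by the target response."""
    template = PROMPT_WITH_INPUT if ex.input else PROMPT_NO_INPUT
    prompt = template.format(instruction=ex.instruction, input=ex.input)  # unused keys are ignored
    return prompt + ex.output


example = Example(instruction="Translate this greeting into English.", output="Hello", input="Bonjour")
print(to_training_text(example))
```

During fine-tuning, the model learns to continue the prompt with the response, which is what makes it follow instructions the way ChatGPT does.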

Latest newsletters:

The bill?

This newsletter is free. If you'd like to encourage me to keep up this modest work of curation and summarizing, you can take a few seconds to:

forward this email to a friend or share it on WhatsApp

star this email in your inbox

click the heart at the bottom of this email

Many thanks in advance! 🙏

Click here to subscribe and receive future emails if this one was forwarded to you.

A few words about the chef

I've written more than 50 articles in recent years, available here, many of them published in outlets such as Journal du Net (my columns here), the Huffington Post, L'Express and Les Échos.

Find my Parlons Futur podcast here (or search for "Parlons Futur" in your favorite podcast app): among other things, you'll find interviews and book summaries (notably, I got to interview Jacques Attali).

I'm CEO and co-founder of the digital agency KRDS, with offices in 6 countries across France and Asia. I'm based in Singapore (my LinkedIn, my Twitter) and am also a member of the NXU think tank.

Thanks, and have a great week!

Thomas
