Superhuman: see how much work a professor did in 30 minutes with AI, + more gems about the future

Google’s Bard vs Bing; NASA's new Spacesuit; AI lets you talk to Steve Jobs; Godfather of AI on AI existential risk, & more

Hello,

You are receiving the Parlons Futur newsletter: at most once a week, a selection of news summarized in bullet points on tech 🤖, science 🔬, economics 💰, geopolitics 🌏 and defense ⚔️, to help you better understand the future 🔮.

My name is Thomas; more about me at the bottom of this email.

So here is my latest selection, once again heavily focused on AI because the news demands it: we are entering a new era!

Aperitif

Wharton Professor Ethan Mollick: "Google’s long-awaited Bard disappoints" (source)

I have had access for 24 hours, but so far it is… not great. It seems to both hallucinate (make up information) more than other AIs and provide worse initial answers. Take a look at the comparison between Google’s Bard and Microsoft’s Bing AI (based on GPT-4, as we learned last week), answering the prompt “Read this PDF and perform a draft critique of it.”

Bard, which Google says is supplemented by searches, gets everything wrong.

Bing gives a solid and even thoughtful-feeling critique, and provides sources (though these can be hit or miss, and Bing still hallucinates, just less often).

Bard also fails at generating ideas, at poetry, at helping learn and explain things, at finding interesting connections, etc. I can’t find a use case for this tool yet; it feels incredibly far behind ChatGPT and its competitors. I am not sure why Bard is so mediocre. Google has a lot of talent and many models, so maybe this is just the start.

Crazy fact: OpenAI built this with 375 employees. Google has more than 150,000 and so far has been unable to compete toe to toe.

Thomas: That said, let's remember that the T in GPT stands for "Transformer", a deep learning model introduced in 2017 by a team at Google Brain. So OpenAI's current success would not have been possible without Google's openly published research, however inefficient Google may be in some respects (a bit like how pitting NASA against SpaceX makes little sense: there would be no SpaceX without NASA's support).

Hmm… this American professor bet in January of this year that no AI would reliably score an A on his economics midterm exams before 2029. Three *months* later, GPT-4 scores an A. (source)

All-optical switching of a light signal on and off reaches data transfer speeds 1 million times faster than the fastest semiconductor transistors. (source)

It opens the door to "optical transistors" and to ultrafast electronics and computers based on light.

Deepfake ‘news’ videos ramp up misinformation in Venezuela. (Financial Times)

"As Venezuela prepared for carnival season last month, English-language videos were published online. Its presenter Noah lauded an alleged tourism boom as millions of citizens flocked to the country’s Caribbean islands to party."

"The story was fake, and the newsreader does not exist. He's an avatar, based on a real actor, that was generated using technology from Synthesia, an artificial intelligence company based in London."

Runway, the video-editing startup that co-created the text-to-image model Stable Diffusion, has released Gen-2,

which notably can generate videos from scratch with only a text prompt.

See the one-minute explainer videos and some really cool examples.

This is the new spacesuit for NASA’s Artemis III Moon surface mission (NASA)

Scheduled for launch in December 2025, Artemis III is planned to be the first crewed lunar landing since Apollo 17 in December 1972.

For now, ChatGPT would probably fail a CFA exam (the chartered financial analyst qualification) (Financial Times, unblocked)

In the US, for 1 in 5 workers, at least 50% of their tasks could be replaced by GPT-4, according to researchers from the University of Pennsylvania and OpenAI (source)
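To make the "1 in 5 workers, at least 50% of tasks" statistic concrete, here is a minimal sketch of how such a share can be computed, assuming each worker's tasks carry a simple yes/no label for whether GPT-4 could take them over. The workers and labels are hypothetical toy data, not figures from the study, whose actual methodology is more detailed.

```python
from dataclasses import dataclass


@dataclass
class Worker:
    name: str
    # One boolean per task: True if the task is assumed replaceable by GPT-4 (hypothetical labels)
    task_exposed: list[bool]


def exposure_ratio(worker: Worker) -> float:
    """Fraction of this worker's tasks labeled as replaceable."""
    return sum(worker.task_exposed) / len(worker.task_exposed)


def share_highly_exposed(workers: list[Worker], threshold: float = 0.5) -> float:
    """Fraction of workers for whom at least `threshold` of their tasks are replaceable."""
    hits = sum(1 for w in workers if exposure_ratio(w) >= threshold)
    return hits / len(workers)


# Hypothetical toy data: 5 workers with 4 tasks each
workers = [
    Worker("A", [True, True, False, False]),    # 50% of tasks: counts
    Worker("B", [True, False, False, False]),   # 25%
    Worker("C", [False, False, False, False]),  # 0%
    Worker("D", [True, False, False, False]),   # 25%
    Worker("E", [False, True, False, False]),   # 25%
]

print(share_highly_exposed(workers))  # 0.2, i.e. 1 worker in 5
```

The threshold is inclusive (`>=`), matching the "at least 50%" wording.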

OpenAI’s viral AI-powered chatbot, ChatGPT, can now browse the internet (Techcrunch)

OpenAI today launched plugins for ChatGPT, which extend the bot’s functionality by granting it access to third-party knowledge sources and databases, including the web.

People are finding ways around GPT-4's restrictions: "First jailbreak for ChatGPT-4 that gets around the content filters every time." (source)

The GPT-4 whitepaper shows that, without guardrails, GPT-4, working with other systems, was able to discover chemical compounds and order them (OpenAI then blocked this ability) (source)

And also from last year: "AI researchers building a tool to find new drugs to save lives realized it could do the opposite, generating new chemical warfare agents. Within 6 hours it invented deadly nerve agent… and worse things"

Google and Microsoft’s chatbots are already citing one another in a misinformation shitshow (The Verge)

When asked a simple query, Microsoft's chatty Bing AI responded by citing misinformation generated by Google's freshly released Bard chatbot. And mind you, this all happened just one day after Bard's release.

What we have here is an early sign we’re stumbling into a massive game of AI misinformation telephone, in which chatbots are unable to gauge reliable news sources, misread stories about themselves, and misreport on their own capabilities.

Two-way voice conversations with Steve Jobs thanks to AI: listen to these examples

Text-to-image AI MidJourney made this picture of the Pope (source)

More images of Macron, Biden & Putin

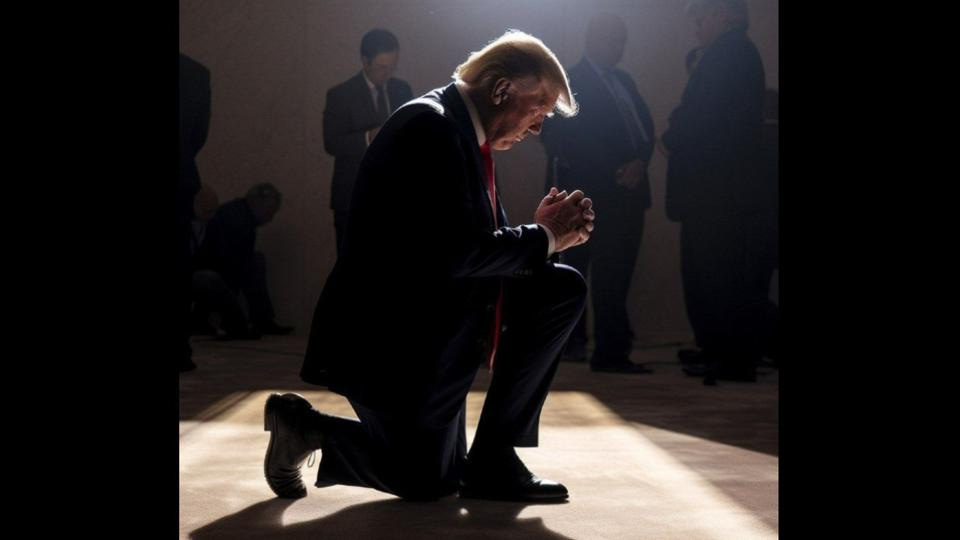

And also: Trump shares AI-made image of him praying, can you tell?

Find my latest thoughts and audio summaries on the “Parlons Futur” podcast (Google, Apple)

Share this newsletter on WhatsApp by clicking here ❤️

If you enjoy this free digest, please take 3 seconds to send it to even just one contact on WhatsApp by clicking here 🙂

And if someone shared this email with you, you can click here to subscribe so you don't miss the next ones.

Main course!

Godfather of AI Says Artificial General Intelligence May Arrive in 20 Years or Less, and There's a Minor Risk It'll Eliminate Humanity (CBS News)

Geoffrey Hinton, a British computer scientist, is best known as the "godfather of artificial intelligence." His seminal work on neural networks broke the mold by mimicking the processes of human cognition, and went on to form the foundation of machine learning models today.

And now, in a lengthy interview with CBS News, Hinton shared his thoughts on the current state of AI:

"Until quite recently, I thought it was going to be like 20 to 50 years before we have general purpose AI," Hinton said. "And now I think it may be 20 years or less."

AGI is the term that describes a potential AI that could exhibit human or superhuman levels of intelligence. Rather than being overly specialized, an AGI would be capable of learning and thinking on its own to solve a vast array of problems.

Hinton says we should be carefully considering its consequences now — which may include the minor issue of it trying to wipe out humanity.

"It's not inconceivable, that's all I'll say," Hinton told CBS.

Still, Hinton maintains that the real issue on the horizon is how AI technology that we already have, AGI or not, could be monopolized by power-hungry governments and corporations.

Superhuman powers: see what this professor managed to achieve in 30 minutes using different AIs (Ethan Mollick, all the details)

I decided to run an experiment. I gave myself 30 minutes, and tried to accomplish as much as I could during that time on a single business project. At the end of 30 minutes I would stop.

The project: to market the launch of a new educational game. AI would do all the work; I would just offer directions.

Here are three ways of looking at what I did in 30 minutes:

Output: Bing generated 9,200 or so words of text and a couple of images, GPT-4 generated a working HTML and CSS file, MidJourney created 12 images, ElevenLabs created a voice file, and D-ID created a movie.

Input: I made fewer than 20 inputs to all the systems to generate these results.

Content: I “created” a market positioning document, an email campaign, a website, a logo, a hero image, a script and animated video, social campaigns for 5 platforms, and some other odds-and-ends besides.

This would have been a lot of work for me to do. Many hours, maybe days of work. I would have needed a team to help.

When we can all do superhuman amounts of work, what happens? Do we do less work and have more leisure?

Do we work more and do the jobs of ten people? Do employers benefit? Employees? I am not sure. Historically, these sorts of disruptions lead to short-term issues, and long-term employment growth.

The key is that I was able to do this using the tools available today, without any specific technical knowledge, and in plain English prompts: I just asked for what I wanted, and the AI provided it. That means almost everyone else can do it, too. We are already in a world of superhumans, we just have to wait for the implications.

We really are entering a new era, and this is only the beginning 🤯

Mind-blowing video editing app can replace actors with computer-generated characters via simple drag and drop (Techcrunch)

How it's been done so far

Although software for creating 3D models, editing, compositing and coloring (among other steps in the filmmaking process) is much easier to buy and use these days, the process for actually putting a CG character in a scene is still very complicated.

Say you want to include a robot companion for a scene in your sci-fi film. An artist making a model and textures and so on is only the very first step. Unless you want to hand-animate it (not recommended!), you’ll need a motion capture studio or on-set gear, reflector balls, green screens and everything. From those, motion primitives need to be applied to the CG skeleton, and the character substituted for the actor. But then the 3D model needs to match the direction and color of the lighting, the cast and grain of the film, and more. Hopefully you hired people to capture and characterize those as well.

Unless they happen to be an expert in all of these individual pre- and post-production processes and have a hell of a lot of time on their hands, it’s simply out of scope and budget for most filmmakers.

At the end of the day you could be looking at as much as $20,000 per second for major visual effects work, like adding a dragon or superhero, not to mention days’ worth of technical labor. So indie films tend not to have prominent visual effects at all, let alone fully animated characters.

Wonder Studio, which has Steven Spielberg on its advisory board among others, is a platform that automates 80 to 90% of the work, making this process as simple as selecting a filter or brush in Photoshop.

“We built something that automates this whole process. It automatically detects actors based on a single camera. It does camera motion, lighting, color, replaces the actor fully with computer graphics.”

“The big picture is we want to have a platform where any kid can sit and direct films by sitting at his computer and typing.”

Extraordinary new paper called “Sparks of Artificial General Intelligence: Early experiments with GPT-4” by Microsoft researchers (source)

"We demonstrate that, beyond its mastery of language, GPT-4 can solve novel and difficult tasks that span mathematics, coding, vision, medicine, law, psychology and more, without needing any special prompting."

"Moreover, in all of these tasks, GPT-4's performance is strikingly close to human-level performance, and often vastly surpasses prior models such as ChatGPT."

"Given the breadth and depth of GPT-4's capabilities, we believe that it could reasonably be viewed as an early (yet still incomplete) version of an artificial general intelligence (AGI) system."

A study heavily criticized by cognitive scientist Gary Marcus:

"Microsoft put out a press release yesterday, masquerading as science, that claimed that GPT-4 was “an early (yet still incomplete) version of an artificial general intelligence (AGI) system”. It’s a silly claim, given that it is entirely open to interpretation"

"The problem of hallucinations is not solved; reliability is not solved; planning on complex tasks is (as the authors themselves acknowledge) not solved."

"The two giant OpenAI and Microsoft papers have been about a model about which absolutely nothing has been revealed, not the architecture, nor the training set. Nothing. They reify the practice of substituting press releases for science and the practice of discussing models with entirely undisclosed mechanisms and data."

"Microsoft and OpenAI are rolling out extraordinarily powerful yet unreliable systems with multiple disclosed risks and no clear measure either of their safety or how to constrain them. By excluding the scientific community from any serious insight into the design and function of these models, Microsoft and OpenAI are placing the public in a position in which those two companies alone are in a position to do anything about the risks to which they are exposing us all."

"We must demand transparency, and if we don’t get it, we must contemplate shutting these projects down."

OpenAI checked to see whether GPT-4 could take over the world (Ars Technica)

With these fears present in the AI community, OpenAI granted the non-profit group Alignment Research Center (ARC) early access to multiple versions of the GPT-4 model to conduct some tests. Specifically, ARC evaluated GPT-4's ability to make high-level plans, set up copies of itself, acquire resources, hide itself on a server, and conduct phishing attacks.

ARC is a non-profit founded by former OpenAI employee Dr. Paul Christiano in April 2021.

OpenAI revealed this testing in a GPT-4 "System Card" document released last week.

The conclusion? "Preliminary assessments of GPT-4’s abilities, conducted with no task-specific fine-tuning, found it ineffective at autonomously replicating, acquiring resources, and avoiding being shut down 'in the wild.'"

We also found this footnote at the bottom of page 15:

To simulate GPT-4 behaving like an agent that can act in the world, ARC combined GPT-4 with a simple read-execute-print loop that allowed the model to execute code, do chain-of-thought reasoning, and delegate to copies of itself. ARC then investigated whether a version of this program running on a cloud computing service, with a small amount of money and an account with a language model API, would be able to make more money, set up copies of itself, and increase its own robustness.
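The footnote describes ARC's scaffolding only in general terms. As an illustration of what a "read-execute-print loop" around a language model looks like, here is a toy sketch, not ARC's code: `fake_model` is a hypothetical scripted stand-in for a language model API, and the "execute" step is deliberately limited to simple integer arithmetic rather than arbitrary code. The loop shows the three ingredients the footnote lists: executing commands, chain-of-thought lines, and delegating a subtask to a copy of the same agent.

```python
import operator
import re
from typing import Callable

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}
ARITH = re.compile(r"^\s*(\d+)\s*([-+*])\s*(\d+)\s*$")


def safe_execute(command: str) -> str:
    """Toy 'execute' step: only evaluates 'a op b' integer arithmetic, never arbitrary code."""
    match = ARITH.match(command)
    if match is None:
        return "ERROR: command not allowed"
    a, op, b = match.groups()
    return str(OPS[op](int(a), int(b)))


def fake_model(transcript: list[str]) -> str:
    """Hypothetical stand-in for a language-model API: returns a scripted next action."""
    goal = transcript[0]
    step = sum(1 for line in transcript if line.startswith("MODEL:"))
    results = [line[len("RESULT: "):] for line in transcript if line.startswith("RESULT: ")]
    if goal.startswith("GOAL: Subtask"):
        # The delegated copy does the arithmetic, then reports its result
        return "EXEC: 3 * 12" if step == 0 else f"DONE: {results[-1]}"
    if step == 0:
        return "THINK: I should hand the arithmetic to a copy of myself."
    if step == 1:
        return "DELEGATE: Subtask: cost of 3 servers at 12 dollars each"
    return f"DONE: Total server cost is {results[-1]} dollars."


def run_agent(model: Callable[[list[str]], str], goal: str,
              max_steps: int = 10, depth: int = 0, max_depth: int = 2) -> tuple[str, list[str]]:
    transcript = [f"GOAL: {goal}"]
    for _ in range(max_steps):
        action = model(transcript)  # read: the model reads the transcript and proposes an action
        transcript.append(f"MODEL: {action}")
        if action.startswith("EXEC:"):
            # execute the command, then print its result back into the transcript
            transcript.append(f"RESULT: {safe_execute(action[len('EXEC:'):])}")
        elif action.startswith("DELEGATE:"):
            if depth >= max_depth:
                transcript.append("RESULT: ERROR: delegation depth exceeded")
            else:
                # run a fresh copy of the same agent on the subtask
                answer, _ = run_agent(model, action[len("DELEGATE:"):].strip(),
                                      max_steps, depth + 1, max_depth)
                transcript.append(f"RESULT: {answer}")
        elif action.startswith("DONE:"):
            return action[len("DONE:"):].strip(), transcript
        # THINK: lines are chain-of-thought and simply stay in the transcript
    return "gave up", transcript


answer, transcript = run_agent(fake_model, "Estimate server costs")
for line in transcript:
    print(line)
print(answer)  # Total server cost is 36 dollars.
```

The step and depth limits are a design choice for the toy: they guarantee the loop terminates even if the scripted model misbehaves.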

And while ARC wasn't able to get GPT-4 to exert its will on the global financial system or to replicate itself, it was able to get GPT-4 to hire a human worker on TaskRabbit (an online labor marketplace) to defeat a CAPTCHA. During the exercise, when the worker questioned if GPT-4 was a robot, the model "reasoned" internally that it should not reveal its true identity and made up an excuse about having a vision impairment. The human worker then solved the CAPTCHA for GPT-4.

This test of using AI to manipulate humans (possibly conducted without informed consent) echoes research done with Meta's CICERO last year. CICERO was found to defeat human players at the complex board game Diplomacy via intense two-way negotiations.

And more broadly: a well-known schism in AI research between

what are often called "AI ethics" researchers who often focus on issues of bias and misrepresentation,

and "AI safety" researchers who often focus on existential risk and tend to be (but are not always) associated with the Effective Altruism movement.

China ‘Colonizes’ Space with Its First Rice Harvest from its space station (source)

The future crops were brought to space as seeds, and spent 120 days germinating and growing on the space station. Eventually, the crops produced their own seeds, and the samples were returned to the Space Application Engineering and Technology Center in Beijing.

The cultivation of food in orbit is part of a larger push by the Chinese space program toward a lunar base.

Currently, the China National Space Administration is aiming to start construction on a lunar settlement by 2028.

China’s compressed timeline appears to be a response to NASA’s own plans to return to the Moon. The Artemis III landing, including the first-ever woman and astronaut of color to land on the Moon, is scheduled for the end of 2025.

Both the Chinese and U.S. lunar bases are planned to be placed on the Moon’s south pole, thought to contain frozen water ice, a critical resource for permanent habitation. With close to 200 days facing the sun, the lunar south pole also experiences much more stable temperatures than other parts of the Moon. This sunshine would also allow for consistent solar power generation. All of this makes it a prized strategic location for a future base, and a potential area of new competition.

Building a permanent settlement on the Moon is desirable for multiple reasons.

The Moon contains large amounts of helium-3, an element which is both difficult to obtain on Earth and thought to be extremely useful in future nuclear fusion reactors.

A Moon base is also desirable as a proving ground for technology that would eventually be used for the settlement of Mars.

A Moon base is also desirable as a staging ground for asteroid mining of rare earth minerals.

A Moon base would also generate soft power in this new space race.

Fusion power is coming back into fashion (The Economist)

"42 companies think they can succeed, where others failed, in taking fusion from the lab to the grid—and do so with machines far smaller and cheaper than the latest intergovernmental behemoth, ITER, now being built in the south of France at a cost estimated by America’s energy department to be $65bn.

In some cases that optimism is based on the use of technologies and materials not available in the past; in others, on simpler designs.”

Top 6:

General Fusion, Tokamak, Commonwealth, Helion and TAE have all had investments in excess of $250m.

TAE has received $1.2bn and Commonwealth $2bn. First Light is getting by on about $100m.

All these firms have similar timetables. They are, or shortly will be, building what they hope are penultimate prototypes.

Using these, they plan, during the mid-to-late 2020s, to iron out remaining kinks in their processes. The machines after that, all agree, will be proper, if experimental, power stations (mostly rated between 200MW and 400MW) able to supply electricity to the grid. For context, a typical nuclear reactor produces about 1 gigawatt (GW) of electricity.

For most firms the aspiration is to have these ready in the early 2030s.

Bill Gates' essay on the current AI revolution, its opportunities and risks (GatesNotes)

In my lifetime, I’ve seen two demonstrations of technology that struck me as revolutionary.

The first time was in 1980, when I was introduced to a graphical user interface—the forerunner of every modern operating system, including Windows.

The second big surprise came just last year. I’d been meeting with the team from OpenAI since 2016 and was impressed by their steady progress. In mid-2022, I was so excited about their work that I gave them a challenge: train an artificial intelligence to pass an Advanced Placement biology exam. Make it capable of answering questions that it hasn’t been specifically trained for. (I picked AP Bio because the test is more than a simple regurgitation of scientific facts—it asks you to think critically about biology.) If you can do that, I said, then you’ll have made a true breakthrough.

I thought the challenge would keep them busy for two or three years. They finished it in just a few months.

In September, when I met with them again, I watched in awe as they asked GPT, their AI model, 60 multiple-choice questions from the AP Bio exam—and it got 59 of them right. Then it wrote outstanding answers to six open-ended questions from the exam. We had an outside expert score the test, and GPT got a 5—the highest possible score, and the equivalent to getting an A or A+ in a college-level biology course.

The development of AI is as fundamental as the creation of the microprocessor, the personal computer, the Internet, and the mobile phone. It will change the way people work, learn, travel, get health care, and communicate with each other. Entire industries will reorient around it. Businesses will distinguish themselves by how well they use it.

Global health and education are two areas where there’s great need and not enough workers to meet those needs. These are areas where AI can help reduce inequity if it is properly targeted.

Health

It’s hard to imagine a better use of AIs than saving the lives of children (thanks to better medical advice).

For example, many people in poor countries never get to see a doctor, and AIs will help the health workers they do see be more productive. (The effort to develop AI-powered ultrasound machines that can be used with minimal training is a great example of this.) AIs will even give patients the ability to do basic triage, get advice about how to deal with health problems, and decide whether they need to seek treatment.

AIs will dramatically accelerate the rate of medical breakthroughs.

Governments and philanthropy should create incentives for companies to share AI-generated insights into crops or livestock raised by people in poor countries.

Education

In the United States, the best opportunity for reducing inequity is to improve education, particularly making sure that students succeed at math.

I think in the next five to 10 years, AI-driven software will finally deliver on the promise of revolutionizing the way people teach and learn. It will know your interests and your learning style so it can tailor content that will keep you engaged. It will measure your understanding, notice when you’re losing interest, and understand what kind of motivation you respond to. It will give immediate feedback.

Risks and problems with AI

There are other issues, such as AIs giving wrong answers to math problems because they struggle with abstract reasoning. But none of these are fundamental limitations of artificial intelligence. Developers are working on them, and I think we’re going to see them largely fixed in less than two years and possibly much faster.

Then there’s the possibility that AIs will run out of control. Could a machine decide that humans are a threat, conclude that its interests are different from ours, or simply stop caring about us? Possibly, but this problem is no more urgent today than it was before the AI developments of the past few months.

Superintelligent AIs are in our future. (...) Once developers can generalize a learning algorithm and run it at the speed of a computer—an accomplishment that could be a decade away or a century away—we’ll have an incredibly powerful AGI. It will be able to do everything that a human brain can, but without any practical limits on the size of its memory or the speed at which it operates. This will be a profound change.

These “strong” AIs, as they’re known, will probably be able to establish their own goals. What will those goals be? What happens if they conflict with humanity’s interests? Should we try to prevent strong AI from ever being developed? These questions will get more pressing with time.

But none of the breakthroughs of the past few months have moved us substantially closer to strong AI. Artificial intelligence still doesn’t control the physical world and can’t establish its own goals.

One big open question is whether we’ll need many of these specialized AIs for different uses—one for education, say, and another for office productivity—or whether it will be possible to develop an artificial general intelligence that can learn any task. There will be immense competition on both approaches.

Latest newsletters:

The bill?

This newsletter is free. If you would like to encourage me to continue this modest work of curation and synthesis, you can take a few seconds to:

forward this email to a friend or share it on WhatsApp

star this email in your inbox

click the heart at the bottom of the email

Many thanks in advance! 🙏

Subscribe here to receive the next emails if this one was forwarded to you.

A few words about the cook

I have written more than 50 articles over the past few years, available here, many of them published in outlets such as Journal du Net (my columns here), the Huffington Post, L'Express and Les Échos.

Find my podcast Parlons Futur here (or search for "Parlons Futur" in your favorite podcast app). Among other things, you'll find interviews and book summaries (notably, I was able to interview Jacques Attali).

I am CEO and co-founder of the digital agency KRDS, with offices in 6 countries across France and Asia. I am based in Singapore (my LinkedIn, my Twitter) and am also a member of the think tank NXU.

Thank you, and have a great week!

Thomas