🚀 World's fastest shoes, machine reads mind at a distance, wear this sweater and escape AI, image-to-music & more

Amazing text-to-video, prompt battles, AI creates "drone" shots from your phone footage & more

Hello,

You are receiving the Parlons Futur newsletter: once a week at most, a selection of news summarized in bullet points on tech 🤖, science 🔬, economics 💰, geopolitics 🌏 and defense ⚔️ to better understand the future 🔮.

My name is Thomas; more about me at the bottom of this email.

So here is my latest selection!

Appetizers

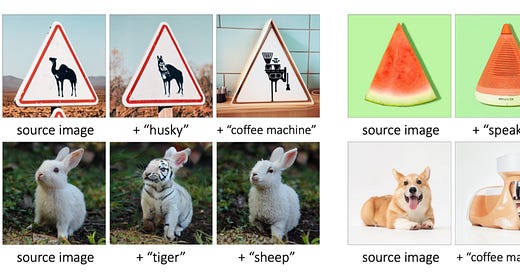

New fun AI tool: upload a source image, suggest a word, and the tool will update the image under the influence of the word

share a pic of a piece of watermelon, enter "lamp", and it will generate a lamp with an air of watermelon

Many more examples here

This sweater developed by the University of Maryland is an invisibility cloak against AI. It uses "adversarial patterns" to stop AI from recognising the person wearing it. See the AI getting confused in that 1-min video

After rap battles, we now have "Prompt Battle" nights: "It's like a rap battle but with keyboards and DALL-E access. Same fierce competition and vibrant energy as a real battle" (as a reminder, DALL-E is a tool that uses AI to generate an original image from a few words of text)

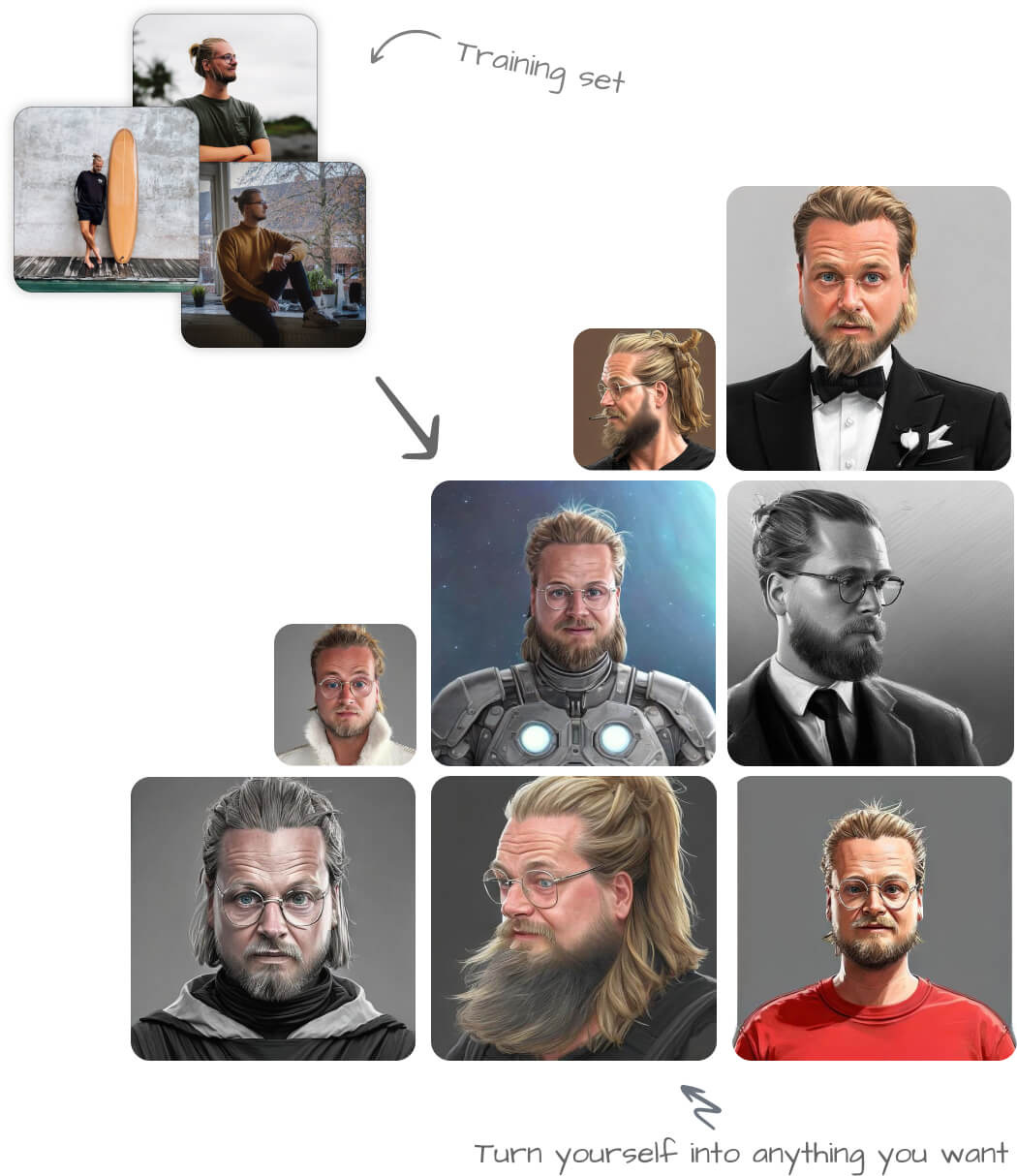

New AI tools to create original avatar pics are all the rage:

profilepicture.ai: upload at least 10 photos, the AI trains on them for up to 3 hours, and for $34 you get more than a hundred new profile pictures (works for humans, cats and dogs, Full HD quality 2048x2048)

photoai.me: AI will create photos of you based on the pack you choose. Just upload ~10 photos of yourself (after you pay), our AI will train on those, and we’ll deliver 10 new photos of you within 12-24hrs. Several packs at $25 each: Linkedin pack, Tinder pack, Celeb pack

Upload a few pics to this tool avatarai.me, and for $40, get 100 avatar pics in many different styles

Rewind: "the search engine for your life", a macOS app that enables you to find anything you’ve seen, said, or heard. This tech is only possible now thanks to the latest Apple chip (the 2-min video demo)

And yet another promising AI tool : it creates key insights of a podcast episode with short AI-generated audio summaries, and lets you deep dive into the parts of the episode you find most interesting.

In the same vein: assemblyai.com "automatically summarizes audio and video files at scale with AI": for example, a 30-minute audio file is summarized in writing, in the format of your choice: 5 bullet points, a paragraph, a headline, or 3 words 😱

I haven't tested it, and I assume they aren't lying outright, but even if it is still imperfect, just imagine where we'll be in 5 years. What a crazy time!

It seems inevitable: soon there will be tools to translate a text or a speech in real time, into the language of your choice, in the style of your choice (formal, casual, in prose, in verse, in alexandrines, why not, etc.), with the accent of your choice, summarized or not and, if summarized, with the degree of concision of your choice, in the voice of the person of your choice, delivered by a photorealistic, three-dimensional rendering of the person of your choice, etc.

I wrote in this op-ed, Athènes 2.0, ou quelle place pour l’Homme dans un monde de machines ?, published in the Journal du Net in 2013: "We will thus be able to make a truer-than-life 3D Louis de Funès say whatever we want, delivering his lines just as he might have done when he was alive."

This tool can generate music from an image. One example. Similar idea, someone on Twitter suggested "Put in the book, get a soundtrack that plays while you read it, inspired by the content."

Example of using the text-generating AI GPT-3 in a Google spreadsheet: the AI takes a guest's name from column 1 and an anecdote that must be mentioned from column 2, and generates the text of an original "thank you card" in column 3 🤨 (source with other examples)
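For the curious, here is a minimal Python sketch of how such a spreadsheet formula might work under the hood. It is only an illustration, not the tool's actual code: the function names (`build_prompt`, `fill_column_3`), the prompt wording and the `fake_generate` stand-in are all assumptions, and no real GPT-3 call is made.

```python
from typing import Callable, List, Tuple


def build_prompt(guest: str, anecdote: str) -> str:
    """Turn one spreadsheet row (columns 1 and 2) into a text prompt.

    The wording of the prompt is an assumption made for this sketch.
    """
    return (
        f"Write a short, warm thank-you card for {guest}. "
        f"Be sure to mention this anecdote: {anecdote}"
    )


def fill_column_3(
    rows: List[Tuple[str, str]],
    generate: Callable[[str], str],
) -> List[Tuple[str, str, str]]:
    """Return each row with a third column holding the generated card text.

    `generate` is whatever function sends a prompt to a text-generating
    model and returns its answer; it is passed in so the sketch stays
    independent of any particular API.
    """
    return [
        (guest, anecdote, generate(build_prompt(guest, anecdote)))
        for guest, anecdote in rows
    ]


def fake_generate(prompt: str) -> str:
    """Hypothetical stand-in for the model: it just echoes the prompt."""
    return f"[card text generated from: {prompt}]"


if __name__ == "__main__":
    guests = [("Alice", "she brought a homemade cake")]
    for row in fill_column_3(guests, fake_generate):
        print(row)
```

In a real setup, `fake_generate` would be swapped for a call to the text-generation service of your choice.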

This other tool turns videos shot on the ground with your smartphone into a believable video of the location that looks as if it had been shot from the sky by a drone: "Now you can create "drone" shots from your phone footage" (the 17-second demo)

Analyst Benedict Evans on the metaverse:

Making a device better does not necessarily make it universal. Most obviously, we’ve been applying Moore’s Law to games consoles for 40 years or so, and they’ve got a lot better but most people don’t care.

A Playstation 5 is objectively amazing, but the global installed base of games consoles is flat at only about 175m units and it should now be clear that adding even better graphics (another decade of Moore’s Law) isn’t going to change that. Most people simply aren’t interested in that kind of experience no matter how much Moore’s Law Sony and Microsoft throw at them.

VR demos of industrial designers or heart surgeons looking at 3D models are cool, but most people’s work isn't in 3D either

We can’t know whether the metaverse will gain mass adoption in advance. A lot of very clever people did not realise that mobile would replace PCs as the centre of tech, so check back in a decade to find out. But the test is that for VR and AR to matter, we need to do things where 3D matters, whereas mobile did not have to create mobile things.

I'd say that, at a minimum, it will become irresistible for communication (my summary from 2 weeks ago following the Meta/Facebook announcements)

Google’s text-to-video AI is amazing : see the video it generated for "A happy elephant wearing a birthday hat walking under the sea"

When AI goes off the rails: "Salmon in a river" (source)

If you're feeling generous ❤️

If you enjoy this free digest, please take 3 seconds to send it to even just one contact 🙂

And if this email was shared with you, you can click here to subscribe so you don't miss the next ones

Dinner is served!

The Economist's headline today is "Goodbye 1.5°C": Global warming cannot be limited to 1.5°C: The world is missing its lofty climate targets. Time for some realism

The world is already about 1.2°C hotter than it was in pre-industrial times. Given the lasting impact of greenhouse gases already emitted, and the impossibility of stopping emissions overnight, there is no way Earth can now avoid a temperature rise of more than 1.5°C.

There is still hope that the overshoot may not be too big, and may be only temporary, but even these consoling possibilities are becoming ever less likely.

Overshooting 1.5°C does not doom the planet. But it is a death sentence for some people, ways of life, ecosystems, even countries.

If the rich world allows global warming to ravage already fragile countries, it will inevitably end up paying a price in food shortages and proliferating refugees.

The world needs to be more pragmatic, and face up to some hard truths:

1: cutting emissions will require much more money. Global investment in clean energy needs to triple from today’s $1trn a year, and be concentrated in developing countries, which generate most of today’s emissions.

2: fossil fuels will not be abandoned overnight

3: 1.5°C will be missed, greater efforts must be made to adapt to climate change.

Fortunately a lot of adaptation is affordable. It can be as simple as providing farmers with hardier strains of crops and getting cyclone warnings to people in harm’s way.

This is an area where even modest help from rich countries can have a big impact. Yet they are not coughing up the money they have promised to help the poorest ones adapt.

That is unfair: why should poor farmers in Africa, who have done almost nothing to make the climate change, be abandoned to suffer as it does?

Finally, having admitted that the planet will grow dangerously hot, policymakers need to consider more radical ways to cool it. Technologies to suck carbon dioxide out of the atmosphere, now in their infancy, need a lot of attention. So does “solar geoengineering”, which blocks out incoming sunlight. Both are mistrusted by climate activists, the first as a false promise, the second as a scary threat. On solar geoengineering people are right to worry. It could well be dangerous and would be very hard to govern. But so will an ever hotter world.

Tesla completely at odds with the rest of the industry on autonomous driving

Leaving aside the fact that its technology is far from functional, another salient point is that Tesla has long argued that mastering autonomous driving would only require processing video feeds (because we ourselves use only our eyes… well, and our ears a little), with no need for LIDAR (laser imaging, detection, and ranging) on top, a kind of radar that uses lasers instead of radio waves to detect obstacles. All its competitors use it and are very critical of Tesla on this point.

Andrej Karpathy, Tesla's former director of AI (he left recently and is still highly respected by Elon Musk), doubles down in a podcast: "Still no need for LIDAR, I will make a prediction, the other companies will drop it as well"

Tesla had already recently removed radars and ultrasonic sensors from its new models, so Tesla is betting more than ever on video feeds alone.

And where many competitors are also trying, at great expense, to map every road in the world down to the centimeter, Andrej Karpathy drives the point home: "Tesla will not premap environments to one centimeter accuracy, Tesla just uses Google maps level of accuracy. Doing so would be a crutch, a distraction, it costs entropy and bloat, it would be a massive dependency, humans don't need it"

It's hard to know who is right and, to be honest, we still seem very far from autonomous driving; see the next item…

We’re now deep in the autonomy winter.

As tech analyst Benedict Evans explains:

The first wave of machine learning, from 2013 onwards, made a lot of people think that this could actually work, and it certainly got us 90% of the way there, but it now seems fairly clear that having a car with no steering wheel that can drive across the country might be generations away, and will certainly take longer and cost far more than people hoped.

Worth noting: Argo, the Ford/Volkswagen autonomy joint venture with a $1 billion investment, is shutting down (Techcrunch, engadget).

Ford said that it made a strategic decision to shift its resources to developing advanced driver assistance systems, and not autonomous vehicle technology that can be applied to robotaxis.

"Profitable, fully autonomous vehicles at scale are a long way off and we won’t necessarily have to create that technology ourselves," said Ford CEO Jim Farley. "It's estimated that more than a hundred billion has been invested in the promise of level four autonomy," he said during the call, "And yet no one has defined a profitable business model at scale."

In short, Ford is refocusing its investments away from the longer-term goal of Level 4 autonomy (that's a vehicle capable of navigating without human intervention though manual control is still an option) for the more immediate short term gains in faster L2+ and L3 autonomy.

L2+ is today's state of the art, think Ford's BlueCruise or GM's SuperCruise technologies with hands-free driving along pre-mapped highway routes

L3 is where you get into the vehicle handling all safety-critical functions along those routes, not just steering and lane-keeping.

"Commercialization of L4 autonomy, at scale, is going to take much longer than we previously expected," Doug Field, chief advanced product development and technology officer at Ford, said during the call. "L2+ and L3 driver assist technologies have a larger addressable customer base, which will allow it to scale more quickly, and profitably."

The World's Fastest Shoes Promise to Increase Your Walking Speed up to 11km/h (source)

Developed by a team of robotics engineers who spun off their work at Carnegie Mellon University into a new company called Shift Robotics

You don't need to know how to roller skate with the Moonwalkers, you just walk.

A strap-on design allows the Moonwalkers to be used with almost any pair of shoes, and each unit features an electric motor that powers a set of wheels similar to what you’d find on a pair of inline roller skates, but much smaller, and not all in a single line, so there’s no balancing required.

Sensors monitor the user’s walking gait while algorithms automatically adjust the power of the motors to match, synchronized between each foot, so the added speed increases and decreases as the user walks faster or slower.

battery-powered range of about 10 km

because they’re much smaller than an electric scooter or a bike, they’re easy to keep stashed at your desk or even in a backpack when not in use.

Full retail pricing for a pair of Moonwalkers is expected to be around $1,400.

Link to the Kickstarter campaign (they have raised almost 3X what they asked for)

A nice career change: French ex-Arsenal player Mathieu Flamini Has a Plan to Decarbonize the Chemical Industry (Wired)

He cofounded and is now CEO of GFBiochemicals whose main product is an obscure molecule called levulinic acid, that they spent a decade figuring out how to mass produce from agricultural waste products and that offers a “plant-based” alternative to oil-derived chemicals that could be used in thousands of products, from paints to cosmetics.

Levulinic acid is a building block—a platform that can be tweaked and altered to suit the requirements of different industries. GFBiochemicals already has almost 200 patents for plant-based solvents, polyols, and plasticizers—all things that could replace substances extracted from fossil fuels, which have toxic or nonbiodegradable byproducts.

In July, the company opened a new factory near Flamini’s hometown of Marseille in an old oil-refining area, and it’s working on deals with some large multinational chemical companies.

“We’re allowing the replacement of those obsolete molecules, which are having a negative impact on the planet, with new molecules that reduce CO2 emissions and are biodegradable and nontoxic.”

“We want to be the Intel of the chemical world,” Flamini says. “We have a platform technology that allows us to go across industries, from personal care to home care to agriculture to paints and coatings.”

I find it hard to believe, it sounds crazy: researchers manage to read minds without any invasive method (source)

From the scientific paper itself:

Given novel brain recordings, this decoder generates intelligible word sequences that recover the meaning of perceived speech, imagined speech, and even silent videos, demonstrating that a single language decoder can be applied to a range of semantic tasks.

Past mind-reading techniques relied on implanting electrodes deep in people's brains. The new method instead relies on a noninvasive brain scanning technique called functional magnetic resonance imaging (fMRI) to reconstruct language.

This algorithm designed by a team at the University of Texas can “read” the words that a person is hearing or thinking during a functional magnetic resonance imaging (fMRI) brain scan.

“If you had asked any cognitive neuroscientist in the world twenty years ago if this was doable, they would have laughed you out of the room”

Using such fMRI data for this type of research is difficult because it is rather slow compared to the speed of human thoughts.

Instead of detecting the firing of neurons, which happens on the scale of milliseconds, MRI machines measure changes in blood flow within the brain as proxies for brain activity; such changes take seconds.

The reason the setup in this research works is that the system is not decoding language word-for-word, but rather discerning the higher-level meaning of a sentence or thought.

The algorithm was trained with fMRI brain recordings taken as three study subjects—one woman and 2 men, all in their 20s or 30s—listened to 16 hours of podcasts and radio stories.

However, it does have some shortcomings; for example, it isn’t very good at conserving pronouns and often mixes up first- and third-person. The decoder, says Huth, “knows what’s happening pretty accurately, but not who is doing the things.”

If that's the only problem, it already seems incredible!

Notable from a privacy point of view is that a decoder trained on one individual’s brain scans could not reconstruct language from another individual. So someone would need to participate in extensive training sessions before their thoughts could be accurately decoded.

Sam Nastase, a researcher and lecturer at the Princeton Neuroscience Institute who was not involved in the research, says using fMRI recordings for this type of brain decoding is “mind blowing,” since such data are typically so slow and noisy.

Since the decoder uses noninvasive fMRI brain recordings, it has higher potential for real-world application than do invasive methods, though the expense and inconvenience of using MRI machines is an obvious challenge.

Wow : the results reveal which parts of the brain are responsible for creating meaning. By using the decoder on recordings of specific areas such as the prefrontal cortex or the parietal temporal cortex, the team could determine which part was representing what semantic information.

Latest newsletters:

The bill?

This newsletter is free. If you would like to encourage me to keep up this modest work of curation and summarizing, you can take a few seconds to:

forward this email to a friend

star this email in your inbox

click the heart at the bottom of the email

Many thanks in advance! 🙏

Click here to subscribe and receive the next emails if this one was forwarded to you.

A few words about the cook

I have written more than 50 articles in recent years, which you can find here, many of them published in media outlets such as the Journal du Net (my columns here), the Huffington Post, L'Express and Les Échos.

Find my podcast Parlons Futur here (or type "Parlons Futur" into your favorite podcast app); it includes, among other things, interviews and book summaries (notably, I was able to interview Jacques Attali).

I am CEO and co-founder of the digital agency KRDS; we have offices in 6 countries across France and Asia. I am based in Singapore (my Linkedin, my Twitter) and am also a member of the think tank NXU.

Thank you, and have a good weekend!

Thomas